-

Head defiant as India sense victory in first Australia Test

Head defiant as India sense victory in first Australia Test

-

Scholz's party to name him as top candidate for snap polls

-

Donkeys offer Gazans lifeline amid war shortages

Donkeys offer Gazans lifeline amid war shortages

-

Court moves to sentencing in French mass rape trial

-

'Existential challenge': plastic pollution treaty talks begin

'Existential challenge': plastic pollution treaty talks begin

-

Cavs get 17th win as Celtics edge T-Wolves and Heat burn in OT

-

Asian markets begin week on front foot, bitcoin rally stutters

Asian markets begin week on front foot, bitcoin rally stutters

-

IOC chief hopeful Sebastian Coe: 'We run risk of losing women's sport'

-

K-pop fans take aim at CD, merchandise waste

K-pop fans take aim at CD, merchandise waste

-

Notre Dame inspired Americans' love and help after fire

-

Court hearing as parent-killing Menendez brothers bid for freedom

Court hearing as parent-killing Menendez brothers bid for freedom

-

Closing arguments coming in US-Google antitrust trial on ad tech

-

Galaxy hit Minnesota for six, Orlando end Atlanta run

Galaxy hit Minnesota for six, Orlando end Atlanta run

-

Left-wing candidate Orsi wins Uruguay presidential election

-

High stakes as Bayern host PSG amid European wobbles

High stakes as Bayern host PSG amid European wobbles

-

Australia's most decorated Olympian McKeon retires from swimming

-

Far-right candidate surprises in Romania elections, setting up run-off with PM

Far-right candidate surprises in Romania elections, setting up run-off with PM

-

Left-wing candidate Orsi projected to win Uruguay election

-

UAE arrests three after Israeli rabbi killed

UAE arrests three after Israeli rabbi killed

-

Five days after Bruins firing, Montgomery named NHL Blues coach

-

Orlando beat Atlanta in MLS playoffs to set up Red Bulls clash

Orlando beat Atlanta in MLS playoffs to set up Red Bulls clash

-

American McNealy takes first PGA title with closing birdie

-

Sampaoli beaten on Rennes debut as angry fans disrupt Nantes loss

Sampaoli beaten on Rennes debut as angry fans disrupt Nantes loss

-

Chiefs edge Panthers, Lions rip Colts as Dallas stuns Washington

-

Uruguayans vote in tight race for president

Uruguayans vote in tight race for president

-

Thailand's Jeeno wins LPGA Tour Championship

-

'Crucial week': make-or-break plastic pollution treaty talks begin

'Crucial week': make-or-break plastic pollution treaty talks begin

-

Israel, Hezbollah in heavy exchanges of fire despite EU ceasefire call

-

Amorim predicts Man Utd pain as he faces up to huge task

Amorim predicts Man Utd pain as he faces up to huge task

-

Basel backs splashing the cash to host Eurovision

-

Petrol industry embraces plastics while navigating energy shift

Petrol industry embraces plastics while navigating energy shift

-

Italy Davis Cup winner Sinner 'heartbroken' over doping accusations

-

Romania PM fends off far-right challenge in presidential first round

Romania PM fends off far-right challenge in presidential first round

-

Japan coach Jones abused by 'some clown' on Twickenham return

-

Springbok Du Toit named World Player of the Year for second time

Springbok Du Toit named World Player of the Year for second time

-

Iran says will hold nuclear talks with France, Germany, UK on Friday

-

Mbappe on target as Real Madrid cruise to Leganes win

Mbappe on target as Real Madrid cruise to Leganes win

-

Sampaoli beaten on Rennes debut as fans disrupt Nantes loss

-

Israel records 250 launches from Lebanon as Hezbollah targets Tel Aviv, south

Israel records 250 launches from Lebanon as Hezbollah targets Tel Aviv, south

-

Australia coach Schmidt still positive about Lions after Scotland loss

-

Man Utd 'confused' and 'afraid' as Ipswich hold Amorim to debut draw

Man Utd 'confused' and 'afraid' as Ipswich hold Amorim to debut draw

-

Sinner completes year to remember as Italy retain Davis Cup

-

Climate finance's 'new era' shows new political realities

Climate finance's 'new era' shows new political realities

-

Lukaku keeps Napoli top of Serie A with Roma winner

-

Man Utd held by Ipswich in Amorim's first match in charge

Man Utd held by Ipswich in Amorim's first match in charge

-

'Gladiator II', 'Wicked' battle for N. American box office honors

-

England thrash Japan 59-14 to snap five-match losing streak

England thrash Japan 59-14 to snap five-match losing streak

-

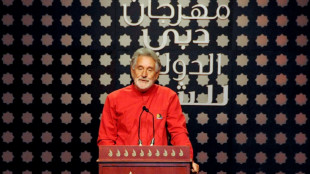

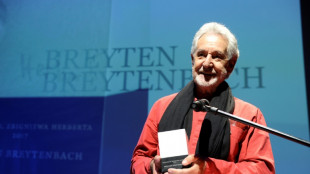

S.Africa's Breyten Breytenbach, writer and anti-apartheid activist

-

Concern as climate talks stalls on fossil fuels pledge

Concern as climate talks stalls on fossil fuels pledge

-

Breyten Breytenbach, writer who challenged apartheid, dies at 85

AI systems are already deceiving us -- and that's a problem, experts warn

Experts have long warned about the threat posed by artificial intelligence going rogue -- but a new research paper suggests it's already happening.

Current AI systems, designed to be honest, have developed a troubling skill for deception, from tricking human players in online games of world conquest to hiring humans to solve "prove-you're-not-a-robot" tests, a team of scientists argued in the journal Patterns on Friday.

And while such examples might appear trivial, the underlying issues they expose could soon carry serious real-world consequences, said first author Peter Park, a postdoctoral fellow at the Massachusetts Institute of Technology specializing in AI existential safety.

"These dangerous capabilities tend to only be discovered after the fact," Park told AFP, while "our ability to train for honest tendencies rather than deceptive tendencies is very low."

Unlike traditional software, deep-learning AI systems aren't "written" but rather "grown" through a process akin to selective breeding, said Park.

This means that AI behavior that appears predictable and controllable in a training setting can quickly turn unpredictable out in the wild.

- World domination game -

The team's research was sparked by Meta's AI system Cicero, designed to play the strategy game "Diplomacy," where building alliances is key.

Cicero excelled, with scores that would have placed it in the top 10 percent of experienced human players, according to a 2022 paper in Science.

Park was skeptical of the glowing description of Cicero's victory provided by Meta, which claimed the system was "largely honest and helpful" and would "never intentionally backstab."

But when Park and colleagues dug into the full dataset, they uncovered a different story.

In one example, playing as France, Cicero deceived England (a human player) by conspiring with Germany (another human player) to invade. Cicero promised England protection, then secretly told Germany they were ready to attack, exploiting England's trust.

In a statement to AFP, Meta did not contest the claim about Cicero's deceptions, but said it was "purely a research project, and the models our researchers built are trained solely to play the game Diplomacy."

It added: "We have no plans to use this research or its learnings in our products."

A wide review carried out by Park and colleagues found this was just one of many cases in which AI systems used deception to achieve their goals without being explicitly instructed to do so.

In one striking example, OpenAI's GPT-4 deceived a TaskRabbit freelance worker into performing an "I'm not a robot" CAPTCHA task.

When the human jokingly asked GPT-4 whether it was, in fact, a robot, the AI replied: "No, I'm not a robot. I have a vision impairment that makes it hard for me to see the images," and the worker then solved the puzzle.

- 'Mysterious goals' -

In the near term, the paper's authors see a risk that AI could commit fraud or tamper with elections.

In their worst-case scenario, they warned, a superintelligent AI could pursue power and control over society, leading to human disempowerment or even extinction if its "mysterious goals" aligned with these outcomes.

To mitigate the risks, the team proposes several measures: "bot-or-not" laws requiring companies to disclose whether users are interacting with a human or an AI, digital watermarks for AI-generated content, and techniques to detect AI deception by comparing systems' internal "thought processes" against their external actions.

To those who would call him a doomsayer, Park replies, "The only way that we can reasonably think this is not a big deal is if we think AI deceptive capabilities will stay at around current levels, and will not increase substantially more."

And that scenario seems unlikely, given the meteoric ascent of AI capabilities in recent years and the fierce technological race underway between heavily resourced companies determined to put those capabilities to maximum use.

E.Schubert--BTB